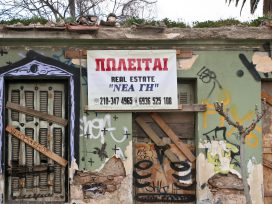

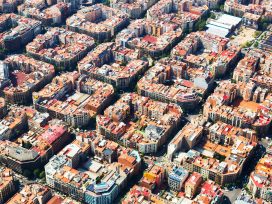

Europe’s housing crisis affects everyone, but it is a particular concern for millennials. Unaffordable rents and property prices, along with rapid gentrification, raise questions about what cities are actually for. Read a compilation of our articles on urbanism, housing, and cities.