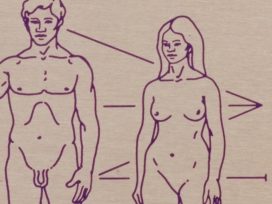

Why was the distinguishing mark of female genitalia erased from NASA’s 1970s image travelling outer space? And will compromised depictions of life on Earth avoid sexist, racist and anthropocentric simplifications by 2036?

AI-generated non-consensual porn is devastating the lives of girls and women. Online images sexualized at the click of a button show how unrealistic the standard advice that women should exercise caution is. Regulation of AI products that enable sexual violence is a first step. But an ideological and intellectual shift on women’s freedom is needed.

Hiding behind the YouTube profile ‘Woman Shot A.I’, an anonymous user posted videos titled ‘Japanese Schoolgirls Shot in Breast’, ‘Sexy Housewife Shot in Breast’, ‘Female Reporter Tragic End’ and ‘Headshot AI’ from 20 June 2025 until mid-September. The AI-generated content, showing women begging for mercy just seconds before being stabbed, shot through the chest or decapitated, had over a thousand followers and more than 175,000 views. ‘Woman Shot A.I’ brought their fantasies to life using Veo 3, a Google AI Studio tool promoted as capable of ‘delivering enhanced realism …, offering unprecedented control to bring your most ambitious visions to life’.

We’re not talking about the internet underworld here, of rifling through someone else’s dirty underwear, ketamine and ammunition, but about videos accessible on a global platform used daily by millions of adults and children. The fact that none of the existing verification mechanisms managed to detect the problem – first in Veo 3, which allegedly blocks all prompts that conflict with Google’s policies and guidelines, and then on YouTube – demonstrates how lightly violence against women is taken. The disputed profile was removed only after independent media outlet 404 Media reported on the case and requested a response from YouTube – in other words, only once ‘Woman Shot A.I’ became a potential PR problem. While the companies were lining their pockets, sexually explicit content and violent material, banned on paper, passed easily under the radar.

AI-produced visuals that have no grounding in reality (I will return to this formulation) are referred to as deepfakes. The first recorded context in which the term was used reveals its original purpose, uncannily close to that of ‘Woman Shot A.I’: as early as 2017, members of the subreddit ‘r/deepfakes’ pasted the faces of famous women onto existing pornographic material. The panic surrounding AI’s intrusion into every sphere of our lives has largely focused on the political context and destructive potential of a technology that fabricates false histories and spreads hatred, persecuting individuals and specific social groups. Yet few approach with the same seriousness the problem of using AI to humiliate and intimidate women – that is, to produce and disseminate misogyny, through deepfake pornography and the revival of femicidal fantasies, using apps and programmes available at everyone’s fingertips.

It is no coincidence that the aforementioned titles of controversial deepfake content sound like hybrids of crime reporting and Pornhub material. In each of the 27 removed videos, a threatening male figure, his back turned to the audience and a gun in his hands, looms over a terrified woman, spraying bullets.

Statistics regularly remind us that fantasies of killing and mutilating the female body are nothing new,[1] just as attempts to discredit and control women by associating them with sex and sexuality are nothing new either. The goal remains the same, but the means and methods are now more technologically sophisticated. As a result, anyone with a few dollars on their card and a bit of malicious intent can take revenge on an ex-partner by digitally stripping her using artificial intelligence and sharing her nude images with friends on WhatsApp. All it takes is a few photographs of her, which, in an age of extreme technological dependence, are usually not difficult to obtain, and voilà. By the time the victim realizes what has happened – if she ever finds out at all – it is already too late.

Pornographic material, like cockroaches, burrows deep into computers, laptops and other electronic devices, into clouds and digital pantries, capable of surviving even a nuclear catastrophe. However much we like to believe that we can distinguish reality from artificially generated content, AI learns and advances at such speed that it is already capable of deceiving even the most technologically literate eyes. In practice, this means that the boundaries of our worlds are becoming increasingly blurred. Even if something did not actually happen, it becomes part of our reality simply because we interact with and consume it. AI-generated realities have real consequences; victims of deepfake pornography often compare their experience to rape.[2]

Deepfakes are becoming a tool of blackmail, manipulation and intimidation. In her book The New Age of Sexism, British journalist and activist Laura Bates takes readers to the once sleepy Spanish town of Almendralejo, where in September 2023 more than 20 high-school girls became targets of an AI attack. Their nude images, created using the app ClothOff, began circulating in local WhatsApp groups and online, causing many victims – the youngest was only 11 at the time – to refuse to leave their homes for days. The perpetrators were identified as a group of boys, their peers, who traumatized an entire local generation of girls out of sheer boredom.

But before we start throwing stones at the boys, I believe it is far more important to address the fact that publicly available apps for undressing anyone, especially minors, exist in the first place. The behaviour of these adolescents should not be seen as an isolated incident. Rather, their decision to sexualize and humiliate their female classmates should be interpreted in light of a broader social context in which violence against women is routinely presented as something normal – the burden of being a woman, one might say.

Because technology giants feed their machines with information steeped in the dominant values and ideologies of the societies from which they originate, technology merely perpetuates existing power relations. This is why, for example, facial recognition systems fail to detect the faces of Black women, and why many undressing apps do not work when fed photographs of men. The most literal example of this ideological mirroring can be found in the design of a new generation of female sex robots which, as we learn from a promotional RealDoll video, will ‘do it all just for you’, making them, in combination with an hourglass figure, white skin and personalised nipples, supposedly perfect partners. One can only hope that the whopping US$11,349.99 price tag for Tanya includes a psychologist.

As with other forms of gender-based violence, education about the horrors of modern technology usually includes a paragraph on how girls and women should be more cautious in digital spaces, watch whom they accept as friends and what kinds of photos they share, because, you know, there are lots of lunatics on the internet. Instead of focusing on perpetrators, or on technology companies such as Meta, on whose platforms apps for producing deepfakes are regularly advertised, or on Google and YouTube, whose control mechanisms clearly fail to recognize explicit content, blame is once again shifted onto the victims. Why is the only solution we can come up with a proposal to silence women and restrict their freedom of movement, first at night, and now in digital spaces as well?

Bates astutely concludes that the only good thing about AI technology is that it has finally made it clear that victims of revenge pornography were never responsible for the crimes and suffering inflicted upon them:

‘The great irony here is that the very existence of deepfakes directly proves the ludicrousness of victim-blaming. When image-based sexual abuse first emerged, … one of the most common responses to the problem … was the obvious solution that women should stop taking intimate photographs of themselves… And then along came deepfake technology to blow totally out of the water the idea that women who never took intimate photographs of themselves were somehow protected from pornographic abuse. How ridiculous all those police officers and principals and op-eds look now, when any woman, anywhere, regardless of whether she has ever taken such images, can still have naked photos of herself spread all over the internet used to victimize and shame her. While we were so busy policing women, perpetrators gleefully used the time to develop increasingly sophisticated ‘nudifying’ tools.’[3]

‘Non-consensual porn’, as opposed to the term ‘revenge porn’ already in circulation, acknowledges that the reason why someone might decide to share another person’s intimate content without permission might be more involved than pure revenge. In the Almendralejo case, the desire to gain popularity among one’s peers was paramount. And let’s not forget the profit margins from an industry worth at least US$15 billion, with some recent estimates reaching US$100 billion.

The recent case involving the use of the AI chatbot Grok, a product of Elon Musk’s company xAI, to digitally strip real adults and minors ultimately confirms the thesis that profit always comes first for technology giants. On New Year’s Day 2026, Musk shared a bikini photo of himself produced using Grok on his social network X, prompting other users to do the same – but not, of course, with their own photos. Only after the media reported a flood of controversial deepfake content on the former Twitter did Musk threaten ‘consequences’ for anyone using Grok to create child pornography. Shortly thereafter, he began charging for the AI content production service. This, combined with the announcement by OpenAI CEO Sam Altman that ChatGPT will soon have an adult mode, makes it crystal clear that the use of artificial intelligence to fulfil users’ pornographic fantasies, regardless of whether they are innocent or pathological, is being actively encouraged.

All European Union (EU) member states have collectively recognized the dangers of AI technology. For example, in Croatia, since 2022, Article 144.a of the Criminal Code has allowed for a one-year prison sentence for a perpetrator who has made sexually explicit content, extendable to up to three years if the recording was made available to a larger number of people. The question, however, is what this legislation looks like in practice. The boys from Almendralejo in Spain were punished, but this was more the exception than the rule. Although I’m not suggesting that we should be criminally prosecuting even more children, it is not acceptable for perpetrators to walk away unscathed when the bodies of their victims are left at the mercy of unpredictable internet currents, especially as around 50% of victims of non-consensual pornography contemplate suicide.

From videos of cats chasing each other across beds to disturbing images of Donald Trump with Elon Musk’s foot in his mouth, anyone who has not yet deleted social media will tell you that the internet has turned into a cesspool filled to the brim with AI slop. Introducing a stricter regulatory framework is the first step, but only an ideological and intellectual shift can save us from this dystopian nightmare into which we are sinking ever deeper. Of course, it is essential to teach children from an early age how to navigate dangerous digital waters, but it is even more important to teach them empathy and solidarity, so that it would never occur to them one day to use school photographs of their female classmates to create fake pornographic material.

The text was published by Vox Feminae as part of the thematic series ‘A Gender Lens for a More Equal Society’, co-financed by the Fund for the Promotion of Pluralism and Diversity in Electronic Media.

[1] In 2025, 15 women were murdered in Croatia. Eleven of them were killed by their partners (as reported by the Croatian Femicide Watch).

[2] L. Bates, The New Age of Sexism: How the AI Revolution is Reinventing Misogyny. Simon & Schuster, 2025.

[3] L. Bates, 2025, p. 32.

Published 11 February 2026

Original in Croatian

Translated by Marta Ferdebar

First published by Vox Feminae (Croatian original); Eurozine (English version)

Contributed by Vox Feminae © Lucija Tunković / Vox Feminae / Eurozine
