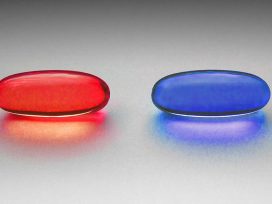

“Every day, people are breaking up and entering into relationships on Facebook. When they do, they play songs that personify their mood. With Valentine’s Day just around the corner, we looked at the songs most played by people in the US on Spotify as they make their relationships and breakups ‘Facebook official’.”

While there has been much public speculation about how the social network Facebook transforms the online behaviour of its members into metadata, relatively little is known about the actual methods Facebook uses to generate knowledge from this data. The data structures modelled by Facebook therefore need to be analysed methodologically. Two areas in particular require attention: first, the positivism of large-scale data analyses; second, the status of theory in the online research carried out by the Facebook Data Team. How is Facebook to be understood in the context of the “digital turn” in the social and cultural sciences? And how does the methodology underlying Big Data research play out in concrete data practices?

Facebook’s Data Centre, Prineville, Oregon, USA. Photo: Tom Raftery. Source: Wikimedia

Monitoring the social

What music do people listen to when they fall in love? And what about when they are breaking up? In 2012, the “Facebook Data Team” – made up of information scientists, statisticians and sociologists and led by the sociologist Cameron Marlow – attempted to answer these two questions. By correlating over a billion user profiles (more than ten per cent of the global population) with six billion songs on the online music service Spotify, they were able to establish a connection between “relationship status” and “taste in music”. Their prognosis about collective consumer behaviour rested on predictions about particular features, obtained via data mining and expressed as a straightforward causal relationship. In February that year, Facebook published two top-ten charts of the songs played by users who had changed their relationship status. They were called simply “Facebook Love Mix” and “Facebook Breakup Mix”.
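The essay does not say how the Data Team actually computed its charts. As a rough illustration only, the following Python sketch shows what the simplest version of such a correlation might look like, assuming a hypothetical log of status changes and song plays: it counts the songs each user played within a fixed number of days after switching to a given status and ranks them. All names (`StatusChange`, `Play`, `top_songs_after_change`) and the seven-day window are assumptions made for the example, not Facebook's or Spotify's method.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class StatusChange:
    user: str
    status: str  # hypothetical labels, e.g. "in_relationship" or "single"
    day: int     # day on which the status was changed


@dataclass(frozen=True)
class Play:
    user: str
    song: str
    day: int     # day on which the song was played


def top_songs_after_change(changes, plays, status, window=7, n=10):
    """Rank songs played within `window` days after a user switched to `status`."""
    # Collect, per user, the days on which they switched to the requested status.
    change_days = defaultdict(list)
    for change in changes:
        if change.status == status:
            change_days[change.user].append(change.day)

    counts = Counter()
    for play in plays:
        # Count the play if it falls inside any post-change window for that user.
        days = change_days.get(play.user, [])
        if any(0 <= play.day - day < window for day in days):
            counts[play.song] += 1

    # Raw play counts, highest first; ties keep first-seen order.
    return [song for song, _ in counts.most_common(n)]
```

A raw count like this mostly reproduces whatever is popular anyway; a chart claiming to capture the music of love or breakup would presumably have to compare against a baseline of general listening. That gap between mere co-occurrence and the causal story the charts tell is the kind of leap the essay questions.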

By distilling a global diagnosis from their “Big Data” analysis, the “back-end” researchers at Facebook were also making a claim about the future, namely: we know what music a billion users will listen to when they fall in love or break up. Ostensibly motivated by mathematical interest, their prognoses reveal the performative origins of data-based knowledge. Despite the mathematization, computerization and operationalization they contain, statements about the future always have performative power. They are speech acts that can take literary, narrative or fictional forms. Their meanings are not clearly determined, but instead emerge in the space of future possibility as aggregate knowledge. Their consensus-forcing plausibility rests not only on discourses of truth and epistemology, but also on cultural and aesthetic processes of communication and patterns of explanation, drawing together the imaginary, the fictional and the empirical.

The top-ten chart reduces a complex reality to an instantly comprehensible format. It is a popularising narrative with a representational, rhetorical and behaviour-moderating function, intended to make research on future behaviour look like an entertaining and harmless exercise. Futurist epistemology must be convincingly presented in order to be credible, and be theatrically exaggerated and effectively advertised in order to attract attention. Scientific claims about the future therefore contain an inherent moment of prophetic exhibitionism, with which scientists attempt to prove the social-diagnostic benefits of social networks.

Under the pretext of merely collecting and relaying information, online platforms employ various means to obtain interpretational power over users. Often, their demands for data go unnoticed, for example when Facebook’s automatic update mode “What’s going on?” exhorts users to post regular information on their timelines. In business terms, Facebook can be described as a company-controlled social media platform with a primary interest in a user-generated data flow. This it obtains via an interface architecture that provides its users with identity-management functionalities. Facebook members use these to represent themselves in the form of profiles, to network with other users, and to administer their contacts.

The meta-knowledge generated by social networks therefore rests on an asymmetrical relation of power that is concentrated in a technical media infrastructure. This gives rise to a bipolar scheme of “front and back ends”. With its specific processes of registration, classification, taxonomization and rating, Facebook has brought significant changes in the conditions of subjectification in network culture.

Front end / Back end

Facebook’s social software generates personal data using standardized e-forms. By completing these, subjects voluntarily engage in procedures of self-description, self-administration and self-evaluation. These electronic forms – tabular grids consisting of logically pre-structured texts with slot and filler functions – enable the inventorization, administration and representation of the data material. The graphic form of the grid and the uniformity of the fields establish standards of informational data processing, transforming personal modes of representation and narration into uniform blocks of information at the front end, which are then submitted for formal-logical processing by the database system at the back end.

As soon as prospective Facebook users open an account, they submit themselves to a complex procedure of knowledge acquisition. When registering for the first time, users are required to enter their personal details into a standard template consisting of document-specific data fields, which at the same time provide orientation. The tabular representation of profiles signals clarity to users and provides a simple grid for personal descriptions that other users can quickly click through. The electronic data page thus creates transparency and simplicity while standardizing decision-making.

Facebook’s pre-structured applications impose formal strictures on the acquisition and representation of data. In the case of e-forms, these strictures apply primarily to authors: certain entries can be made only in a particular way. Graphic authority consists of both qualitative and quantitative criteria, not only dictating the content of the categories of self-description, but also demanding that forms be filled out completely before the process can be completed. In order to be locatable in the grid of the e-form, linear and narrative knowledge must be broken up into informational units. These form-immanent rules underlie the authority of the e-form. Nevertheless, this authority of graphic and logical structure is fragile and unstable, always susceptible to subversion by the authors of forms (for example through the creation of fake profiles). The cultural embedding of authors in historically contingent and socially diverse practices of reading, writing, narration and perception relativizes the explicit commands, instructions and directives of the regulatory framework immanent to the e-form.
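The slot-and-filler logic of the e-form can be made concrete with a small Python sketch. It assumes a hypothetical profile grid (the `Slot` type, the field names and the option lists are illustrative, not Facebook's actual registration form) and shows the two kinds of constraint at issue: required slots that must be filled before the form is accepted, and fixed option lists into which a self-description has to be fitted. Anything without a slot, such as a free-form life story, is simply rejected.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Slot:
    name: str
    required: bool = False
    choices: tuple = ()  # an empty tuple means any non-empty text is accepted


# Hypothetical profile grid; field names and options are illustrative only.
PROFILE_FORM = (
    Slot("first_name", required=True),
    Slot("last_name", required=True),
    Slot("relationship_status",
         choices=("single", "in a relationship", "married", "it's complicated")),
    Slot("hometown"),
    Slot("favourite_music"),
)


def validate(form, entry):
    """Return a list of problems; an empty list means the entry fits the grid."""
    problems = []
    slot_names = {slot.name for slot in form}

    # Information without a matching slot cannot be recorded at all.
    for key in entry:
        if key not in slot_names:
            problems.append(f"no slot for '{key}'")

    for slot in form:
        value = entry.get(slot.name, "").strip()
        if slot.required and not value:
            # The form cannot be completed until every required slot is filled.
            problems.append(f"'{slot.name}' is required")
        elif value and slot.choices and value not in slot.choices:
            # Self-descriptions must be fitted into the predefined options.
            problems.append(f"'{slot.name}' must be one of {slot.choices}")
    return problems
```

The validator can only check whether an entry fits the grid, not whether it is true: a fake profile with well-formed values passes, which is exactly the fragility of the e-form's authority described in the paragraph above.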

There is, then, a multitude of tactical possibilities for subverting the form-immanent dictate. Subversive practices of form-filling aimed at producing contradictory data entries call into question the entire scheme of the electronic form. However, the use of forms entails a further aspect – the silent acquiescence to the form as an appropriate medium with which to record and represent data and information. The authority of the electronic acquisition and representation of knowledge depends on the readiness of end-users to recognize electronic forms as neutral, self-evident and self-explanatory. Underlying this kind of acceptance are historical habits of reading and writing (e.g. book-keeping and procedures of examination and testing) that allow electronic forms to be recognized as conventional and common means of empirical data evaluation. The authority of electronic forms rests less on the institutional authorization of the individual document than on the cultural acceptance of the form per se. The form’s credibility is essentially based on historical experience, in other words: recognition of the form’s form.

E-forms do more than simply provide a context for information. They introduce a grid for the registration of personal features, enabling profiles to be compared and assigned to the parameters of particular search terms. The interrogatory protocol occupies a position of authority over the provider of information; in other words, determining the sequence and type of questions sets out a communicative framework that shifts authority to the realm of format. The electronic form can only develop authority if there are strict rules determining how it is used, and if breaches of those rules are sanctioned. The e-form’s formal stringency guarantees its ability to transfer information in a predictable manner. Its immanent authority is not, however, stable; rather, it is susceptible to cultural practices in the sphere of use.

It is the “non-compulsory” data-fields that allow freedom for personal design, inviting users to create individual lists and tables and providing the opportunity for personal confessions and intimate revelations. These “free” and “non-compulsory” spaces of communication on Facebook provide a highly reliable motivation for self-initiated knowledge production. The retreat of the grid to the informal realm does not per se disorganize the process of knowledge registration. On the contrary, the individualization of data suggests a “de-centralized”, “non-authoritarian” and ultimately “truthful” praxis of knowledge evaluation and in many cases “revives” the readiness of subjects to provide information about themselves.

In the reception mode of its members, Facebook marks the emergence of a new culture of subjectivity, one formed primarily through the acquisition of cultural and social distinction. “Definitions of belonging and strategies of exclusion, and the trading of values and norms as well as the relations of power, not only define the respective ‘outer borders’ of the same, but also the negotiation of its content.” It goes without saying that mechanisms of distinction are one of the basic elements of social organization. However, with the rise of company-controlled social media such as Facebook, distinctions become computerized, generated by algorithms. The ways in which distinctions are offered, selected, distributed and archived therefore remain opaque to Facebook users. The computerized processing of user-generated data is carried out at the back end, in an inaccessible computation space behind the graphical user interface, reserved exclusively for internal data use. Users have only a very vague idea of what information is collected and how it is used. Thus, while personalization is indeed based on the information provided by users, the prediction and provision of content on the basis of past behaviour is a “push” technology that systematically excludes users from participating and exerting influence.

How, then, does Facebook calculate the futures of its members? What methods of registration, computation and evaluation does it employ to generate its prognoses? How are these methods able to model the behaviour of its users?

The Happiness Index

The evaluation of the mass data generated by the social networks is increasingly conducted by so-called “happiness research”, a major field within big-data prognosis. However, the socio-economic interest in happiness largely excludes the academic public sphere. Influential theorists have warned of a “digital divide”, in which knowledge about the future is unequally distributed, potentially leading to imbalances of power between researchers inside and outside networks. Lev Manovich argues that the limitation of access to social data creates a monopoly in the government and administration of the future: “Only social media companies have access to really large social data – especially transactional data. An anthropologist working for Facebook or a sociologist working for Google will have access to data that the rest of the scholarly community will not.”

This inequality cements the position of the social networks as computer-based media of control, whose knowledge is acquired via a vertical and one-dimensional web of communication. The social networks enable a continual flow of data (digital footprints), which they collect and organize, establishing closed spaces of knowledge and communication for experts, who distil the data into information. Knowledge about the future thus passes through various technical and infrastructural levels that are organized hierarchically and in pyramidal form. “The current ecosystem around Big Data creates a new kind of digital divide: the Big Data rich and the Big Data poor. Some company researchers have even gone so far as to suggest that academics shouldn’t bother studying social media data sets – Jimmy Lin, a professor on industrial sabbatical at Twitter, argued that academics should not engage in research that industry ‘can do better’.” Clearly, alongside the de facto technical-infrastructural isolation of knowledge about the future, strategic decision-making is also located at the back end rather than in peer-to-peer communication. While peers are able to falsify results, create fake profiles and communicate nonsense, their limited agency prevents them from moving beyond these tactics and actively shaping the future.

Why, then, has happiness research become so important for the shaping of knowledge about the future? Insofar as it rationally interprets happiness as an individual benefit and calculates social wellbeing by adding up individual declarations of happiness, it draws on the Benthamite principle of the “Greatest Happiness”, according to which the greatest possible good consists in the greatest possible happiness for the largest possible number of people. Since 2007, a major example of futurological prophecy has been the “Facebook Happiness Index”, in which users’ mood is evaluated by analysing the words used in their status messages. On the basis of data provided by status updates, researchers calculate the so-called “Gross National Happiness Index”.

The sociologist Adam Kramer, together with other members of the Facebook Data Team, developed the Happiness Index by evaluating the frequency of positive and negative words in the self-documentary format of the status messages. These “self-reports” were compared with individual users’ general sense of satisfaction (“convergent validity”) and with the data curves on days on which particular events dominated the media (“face validity”). In other words, the binary oppositions “happiness” / “unhappiness” and “satisfaction” / “dissatisfaction” have been developed as indicators of collective mentality, in turn based on collectively shared experiences and specific moods. So far, the analysis of mass data obtained through social networks has enabled the calculation of the emotional state of twenty-two countries. Using the scientific correlation between subjective emotions and population-statistical knowledge, the “Happiness Index” acts not only as an indicator of “good” or “bad” government, but also as a criterion for a possible adjustment of the political to the perceptual processes of the social networks. In other words, the “Happiness Index” represents a further instrument both of scientific expansion and of statutory-administrative decision-making.
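The essay does not give the Data Team's formula. The following Python sketch shows only the general shape of a word-count mood index, under stated assumptions: two tiny, hypothetical word lists (`POSITIVE`, `NEGATIVE`) stand in for a validated lexicon, and each day's score is the share of positive words minus the share of negative words across all status updates posted that day. The function names are likewise illustrative.

```python
import re
from collections import defaultdict

# Hypothetical miniature word lists; a real study would rely on a validated lexicon.
POSITIVE = {"happy", "love", "great", "fun", "thanks"}
NEGATIVE = {"sad", "hate", "tired", "awful", "sick"}


def tokenize(text):
    """Lower-case the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def daily_index(updates):
    """updates: iterable of (day, status_text) pairs.

    Returns {day: share of positive words - share of negative words}.
    """
    totals = defaultdict(lambda: [0, 0, 0])  # day -> [positive, negative, all words]
    for day, text in updates:
        words = tokenize(text)
        counts = totals[day]
        counts[0] += sum(word in POSITIVE for word in words)
        counts[1] += sum(word in NEGATIVE for word in words)
        counts[2] += len(words)
    # Days with no words at all get a neutral score of 0.0.
    return {
        day: (pos - neg) / total if total else 0.0
        for day, (pos, neg, total) in totals.items()
    }
```

Such a count reduces each update to the binary opposition described above: it cannot see negation or irony, so "not happy at all" scores as positive.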

Internet bio-surveillance

In the era of Big Data, the status of social networks has changed radically. Today, they increasingly act as gigantic data collectors for the observational requirements of social-statistical knowledge, and serve as a prime example of normalizing practices. Where extremely large quantities of data are analysed, the analysis now usually entails the aggregation of moods and trends. Numerous studies exist in which the textual data of social media has been analysed in order to predict political attitudes, financial and economic trends, psycho-pathologies, and revolutions and protest movements. The statistical evaluation of Big Data promises a range of advantages, from increased efficiency in economic management via measurement of demand and potential profit to individualized service offers and better social management.

Internet bio-surveillance, or “digital disease detection”, represents a new paradigm of public health governance. While traditional approaches to health prognosis operated with data collected in clinical diagnosis, Internet bio-surveillance uses the methods and infrastructures of health informatics. In other words, it collects and processes unstructured data and information from various web-based sources in order to track changes in health-related behaviour. The two main aims of Internet bio-surveillance are: (1) the early detection of epidemics and biochemical, radiological and nuclear threats; (2) the implementation of strategies and measures of sustainable governance in the fields of health promotion and health education.

Biometric technologies and methods are finding their way into all areas of life and changing people’s everyday routines. It is above all sensor technology, biometric recognition processes and the general tendency towards the convergence of information and communication technologies that stimulate Big Data research. The conquest of mass markets by sensor and biometric recognition processes can partly be explained by the fact that mobile, web-based terminals are equipped with a wide variety of sensors. It is through these that more and more users come into contact with sensor technology or with the measurement of individual physical characteristics. Thanks to more stable and faster mobile networks, many people are permanently connected to the Internet, which further boosts connectivity.

With the development of application software for mobile devices such as smartphones and tablet computers, the culture of bio-surveillance has changed significantly. These new-generation apps are strongly influenced by the dynamics of bottom-up participation. By means of “participatory surveillance”, bio-surveillance increasingly becomes an area for the open production of meaning, providing comment functions, hypertext systems, ranking and voting procedures. Algorithmic prognosis of collective behaviour therefore enjoys increasingly high political status, with the social web becoming the most important data source for knowledge on governance and control.